c#-Kafka配置SASL_PLAINTEXT权限。常用操作命令,创建用户,topic授权

推荐 原创查看已经创建的topic

./bin/kafka-topics.sh --bootstrap-server localhost:9092 --list

创建topic

创建分区和副本数为1的topic

./bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --topic acltest --partitions 1 --replication-factor 1

![]()

创建kafka用户

创建writer用户

./bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter -add-config 'SCRAM-SHA-256=[iterations=8192,password=pwd],SCRAM-SHA-512=[password=pwd]' --entity-type users --entity-name kafkawriter

![]()

创建reader用户

./bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter -add-config 'SCRAM-SHA-256=[iterations=8192,password=pwd],SCRAM-SHA-512=[password=pwd]' --entity-type users --entity-name kafkareader

![]()

删除指定用户

./bin/kafka-configs.sh --zookeeper localhost:2181 --alter --delete-config 'SCRAM-SHA-512' --delete-config 'SCRAM-SHA-256' --entity-type users --entity-name admin

查看指定用户

./bin/kafka-configs.sh --bootstrap-server localhost:9092 --describe --entity-type users --entity-name kafkawriter

![]()

./bin/kafka-configs.sh --zookeeper localhost:2181 --describe --entity-type users --entity-name kafkareader

![]()

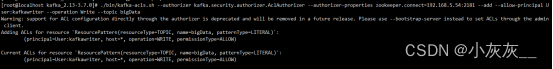

给topic授权

./bin/kafka-acls.sh --authorizer kafka.security.authorizer.AclAuthorizer --authorizer-properties zookeeper.connect=192.168.5.54:2181 --add --allow-principal User:kafkawriter --operation Write --topic bigData

./bin/kafka-acls.sh --authorizer kafka.security.authorizer.AclAuthorizer --authorizer-properties zookeeper.connect=192.168.5.54:2181 --add --allow-principal User:admin --operation Read --topic bigData

查看topic权限

./bin/kafka-acls.sh --authorizer kafka.security.authorizer.AclAuthorizer --authorizer-properties zookeeper.connect=192.168.5.54:2181 --list --topic bigData

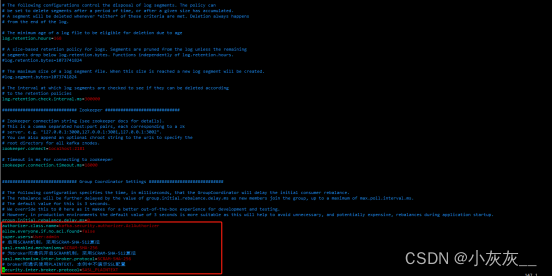

修改server.properties,开启sasl

listeners=SASL_PLAINTEXT://192.168.1.214:9092

advertised.listeners=SASL_PLAINTEXT://192.168.1.214:9092

authorizer.class.name=kafka.security.authorizer.AclAuthorizer

allow.everyone.if.no.acl.found=false

super.users=User:admin

# 启用SCRAM机制,采用SCRAM-SHA-512算法

sasl.enabled.mechanisms=SCRAM-SHA-256

# 为broker间通讯开启SCRAM机制,采用SCRAM-SHA-512算法

sasl.mechanism.inter.broker.protocol=SCRAM-SHA-256

# broker间通讯使用PLAINTEXT,本例中不演示SSL配置

security.inter.broker.protocol=SASL_PLAINTEXT

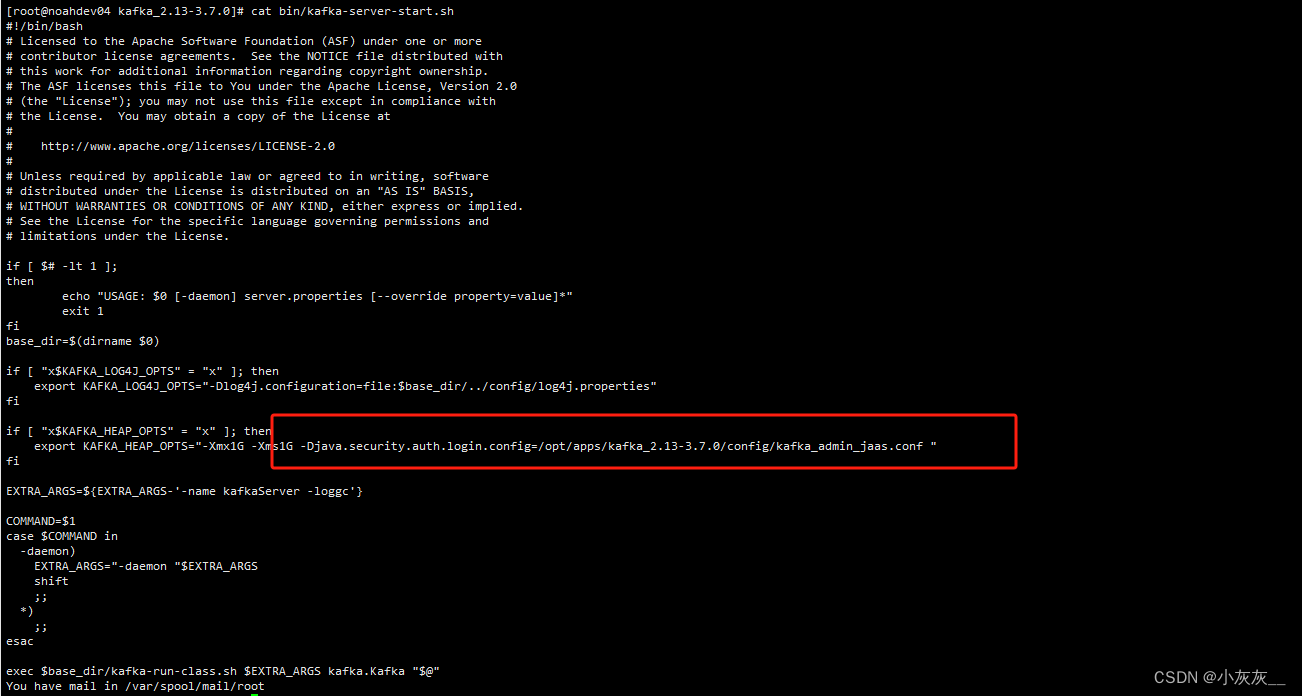

修改bin/kafka-server-start.sh启动脚本

-Djava.security.auth.login.config=/opt/apps/kafka_2.13-3.7.0/config/kafka_admin_jaas.conf

kafka_admin_jaas.conf 文件内容

KafkaServer {

org.apache.kafka.common.security.scram.ScramLoginModule required

username="admin"

password="pwd";

};

重启kafka服务

新建生产者配置

config/producer.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=SCRAM-SHA-256

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="kafkawriter" password="pwd";

![]()

给生产者授权

./bin/kafka-acls.sh --authorizer kafka.security.authorizer.AclAuthorizer --authorizer-properties zookeeper.connect=192.168.1.214:2181 --add --allow-principal User:kafkawriter --operation Write --topic bigData

![]()

生产者发送消息

./bin/kafka-console-producer.sh --broker-list 192.168.1.214:9092 --topic bigData --producer.config ./config/producer.conf

![]()

新建消费者配置

security.protocol=SASL_PLAINTEXT

sasl.mechanism=SCRAM-SHA-256

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="kafkareader" password="pwd";

cat config/producer.conf

![]()

给消费者授权

./bin/kafka-acls.sh --authorizer kafka.security.authorizer.AclAuthorizer --authorizer-properties zookeeper.connect=192.168.1.214:2181 --add --allow-principal User:kafkareader --operation Read --topic bigData

授权group分组

./bin/kafka-acls.sh --authorizer kafka.security.authorizer.AclAuthorizer --authorizer-properties zookeeper.connect=192.168.1.214:2181 --add --allow-principal User:kafkareader --operation Read --topic bigData --group testgroup

消费者消费消息

./bin/kafka-console-consumer.sh --bootstrap-server 192.168.1.214:9092 --topic bigData --consumer.config ./config/consumer.conf --from-beginning --group testgroup

![]()

以上就是配置sasl的全过程了,kafka使用版本为kafka_2.13-3.7.0,文章中的ip地址经过变动,测试人员需保证ip地址一致

更多【c#-Kafka配置SASL_PLAINTEXT权限。常用操作命令,创建用户,topic授权】相关视频教程:www.yxfzedu.com

相关文章推荐

- python-Python(七) 元组 - 其他

- 编程技术-liunx的启动过程 - 其他

- rust-Rust教程7:Gargo包管理、创建并调用模块 - 其他

- c#-顶顶通语音识别使用说明 - 其他

- stm32-FPGA与STM32_FSMC总线通信实验 - 其他

- spring-springboot集成redis -- spring-boot-starter-data-redis - 其他

- ios-LibXL 4.2.0 for c++/net/win/mac/ios Crack - 其他

- unity-Unity DOTS系列之System中如何使用SystemAPI.Query迭代数据 - 其他

- 网络-openssl+SM2开发实例一(含源码) - 其他

- spring-Redis的内存淘汰策略分析 - 其他

- 单一职责原则-01.单一职责原则 - 其他

- 分布式-Java架构师分布式搜索词库解决方案 - 其他

- 电脑-京东数据分析:2023年9月京东笔记本电脑行业品牌销售排行榜 - 其他

- 云计算-什么叫做云计算? - 其他

- 编程技术-基于springboot+vue的校园闲置物品交易系统 - 其他

- 零售-OLED透明屏在智慧零售场景的应用 - 其他

- 交互-顶板事故防治vr实景交互体验提高操作人员安全防护技能水平 - 其他

- unity-原型制作神器ProtoPie的使用&Unity与网页跨端交互 - 其他

- python-Django知识点 - 其他

- 前端-js实现定时刷新,并设置定时器上限 - 其他

2):严禁色情、血腥、暴力

3):严禁发布任何形式的广告贴

4):严禁发表关于中国的政治类话题

5):严格遵守中国互联网法律法规

6):有侵权,疑问可发邮件至service@yxfzedu.com